I will use OVHcloud for the explanation, but it should be quite similar for any other provider.

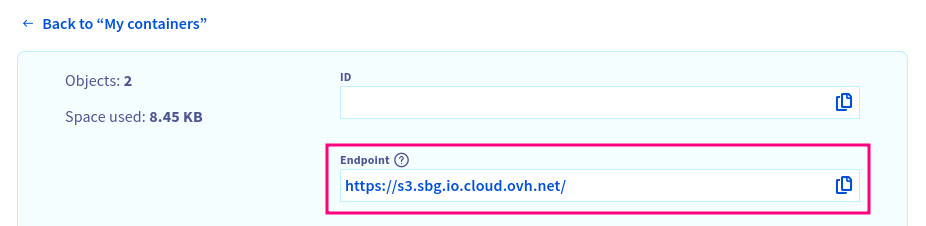

First, your provider should give you a URL to connect to their S3 API and a region. In the case of OVHcloud you will find it inside the container under Endpoint.

Then, you should create an access key/secret key pair to connect to this API. In OVHcloud there is a tab called S3 users on the Object Storage landing page.

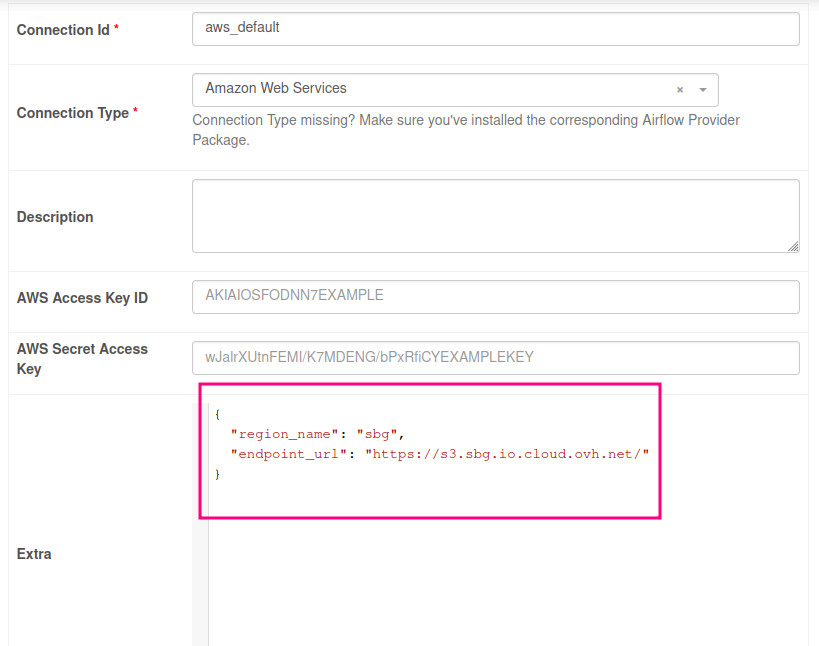

With this information, open Airflow and go to Admin > Connections. You can either reuse the existing default connection (`aws_default`) or create a new one. Edit it and put the following JSON in the Extra field (docs):

    {
      "region_name": "<Your region>",
      "endpoint_url": "<Your provider's S3 API URL>"
    }
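If you would rather define the connection outside the UI, the same values can be packed into an environment variable. What follows is only a minimal sketch, assuming Airflow 2.3 or later (which accepts JSON-serialized connections in `AIRFLOW_CONN_<CONN_ID>` variables). The helper name `build_connection_json` is made up for illustration, and the region, endpoint and keys are placeholders.

```python
import json


def build_connection_json(region, endpoint_url, access_key, secret_key):
    """Serialize an AWS-type connection as JSON for an AIRFLOW_CONN_* variable."""
    connection = {
        "conn_type": "aws",
        "login": access_key,     # access key of the S3 user
        "password": secret_key,  # secret key of the S3 user
        "extra": {
            "region_name": region,
            "endpoint_url": endpoint_url,
        },
    }
    return json.dumps(connection)


if __name__ == "__main__":
    # Placeholder values: replace them with the ones from your provider.
    value = build_connection_json(
        region="<Your region>",
        endpoint_url="<Your provider's S3 API URL>",
        access_key="<Your access key>",
        secret_key="<Your secret key>",
    )
    # Prints a shell line that overrides the aws_default connection.
    print(f"export AIRFLOW_CONN_AWS_DEFAULT='{value}'")
```

Keeping `region_name` and `endpoint_url` under `extra` mirrors what you would type into the Extra field in the UI.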

> [!warning]
> Do not forget to set `region_name`, or writing to S3 may work but reading from S3 may not:
>
>     with document.open("rb") as file:
>         # This was not working for me until I set region_name. It kept failing with:
>         # botocore.exceptions.ClientError: An error occurred (400) when calling the HeadObject
>         # operation: Bad Request
>         ...

And that should be enough for the tutorial to work with your non-AWS Object Storage.
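To check the endpoint, region and keys before involving Airflow at all, a small round trip with boto3 can help. This is a sketch under assumptions: `check_round_trip` and `make_client` are hypothetical helper names, the bucket must already exist, and the object key is an arbitrary choice. It writes one object and then calls HeadObject, the operation that returned the 400 error described above.

```python
def check_round_trip(client, bucket, key="airflow-smoke-test.txt"):
    """Write a small object, then read its metadata back with HeadObject."""
    body = b"hello from airflow"
    client.put_object(Bucket=bucket, Key=key, Body=body)
    # HeadObject is the call that failed with a 400 when region_name was missing.
    head = client.head_object(Bucket=bucket, Key=key)
    return head["ContentLength"] == len(body)


def make_client(region, endpoint_url, access_key, secret_key):
    """Build a boto3 S3 client that points at the non-AWS endpoint."""
    import boto3  # imported lazily so check_round_trip works with any S3-like client

    return boto3.client(
        "s3",
        region_name=region,
        endpoint_url=endpoint_url,
        aws_access_key_id=access_key,
        aws_secret_access_key=secret_key,
    )
```

Calling `check_round_trip(make_client(...), "<Your bucket>")` with your own values should return `True` if both writing and reading work. A `ClientError` on the second call suggests revisiting the region setting.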

I haven’t tried this yet, but you should also be able to set the URL for the S3 service only, using something like this (docs):

    {
      "region_name": "<Your region>",
      "service_config": {
        "s3": {
          "endpoint_url": "<Your provider's S3 API URL>"
        }
      }
    }
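The idea behind the per-service form can be pictured with a few lines of Python. This is purely an illustration and not the Amazon provider's actual code: `resolve_endpoint` is a hypothetical helper, and it assumes that a service-specific `endpoint_url` takes precedence while other services fall back to the connection-wide value, if there is one.

```python
def resolve_endpoint(extra, service_name):
    """Return the service-specific endpoint_url if set, else the connection-wide one."""
    service_config = extra.get("service_config", {}).get(service_name, {})
    return service_config.get("endpoint_url", extra.get("endpoint_url"))


# Placeholder Extra, shaped like the JSON above.
extra = {
    "region_name": "<Your region>",
    "service_config": {
        "s3": {"endpoint_url": "<Your provider's S3 API URL>"},
    },
}

print(resolve_endpoint(extra, "s3"))   # the S3-only URL
print(resolve_endpoint(extra, "sts"))  # None: no override, no global endpoint_url
```

Under that assumption, only S3 calls would go to your provider, and any other AWS service used through the same connection would keep its default endpoint.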